Ethical Concerns: AI’s Growing Role in Our Lives

Artificial Intelligence (AI) and Machine Learning (ML) are everywhere, often working behind the scenes in ways we don’t even notice. Whether it’s your phone’s smart assistant telling you the weather, a music app suggesting your next favorite song, or a self-driving car navigating traffic, AI has woven itself into the fabric of our daily lives. But here’s a question to ponder: just because AI can do all these amazing things, should it be free to do so without limits? Understanding AI’s ethical challenges isn’t just something for tech experts to think about; it affects all of us. Why? Because AI is making decisions that impact everything from who gets hired to how safe we are on the roads. If we don’t stop to think about the consequences, we risk creating technology that does more harm than good.

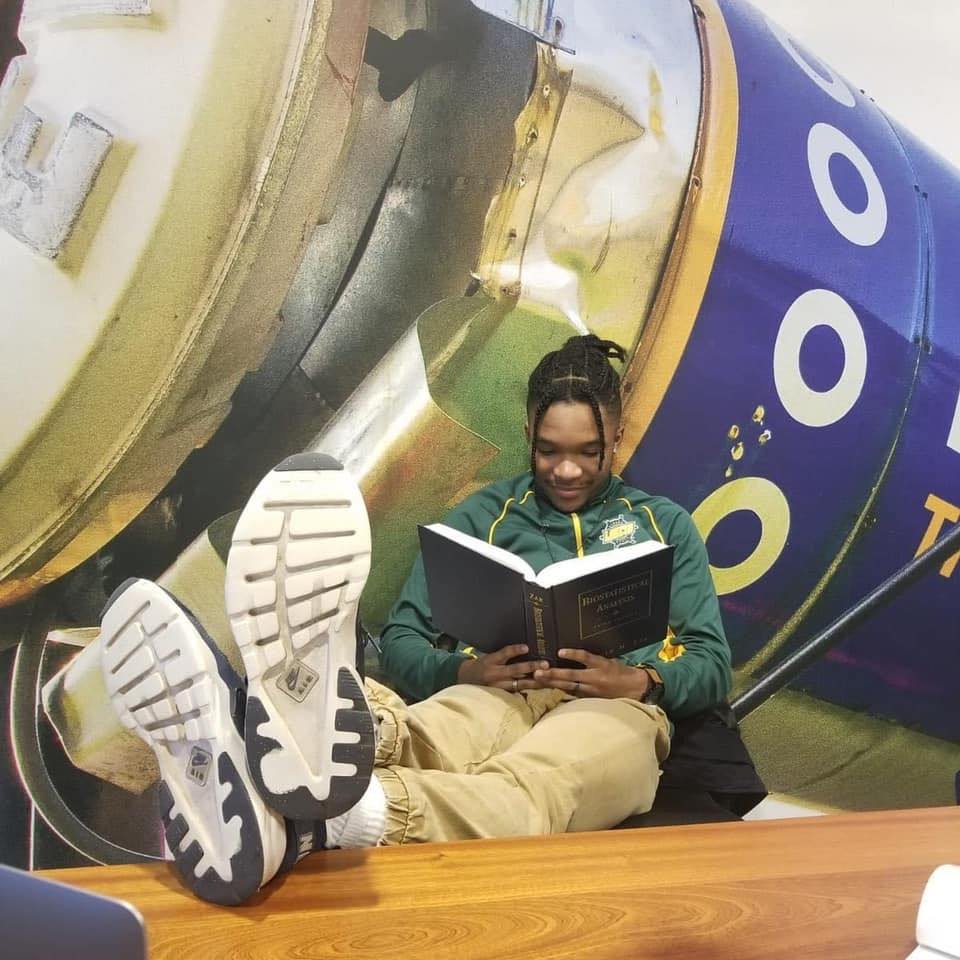

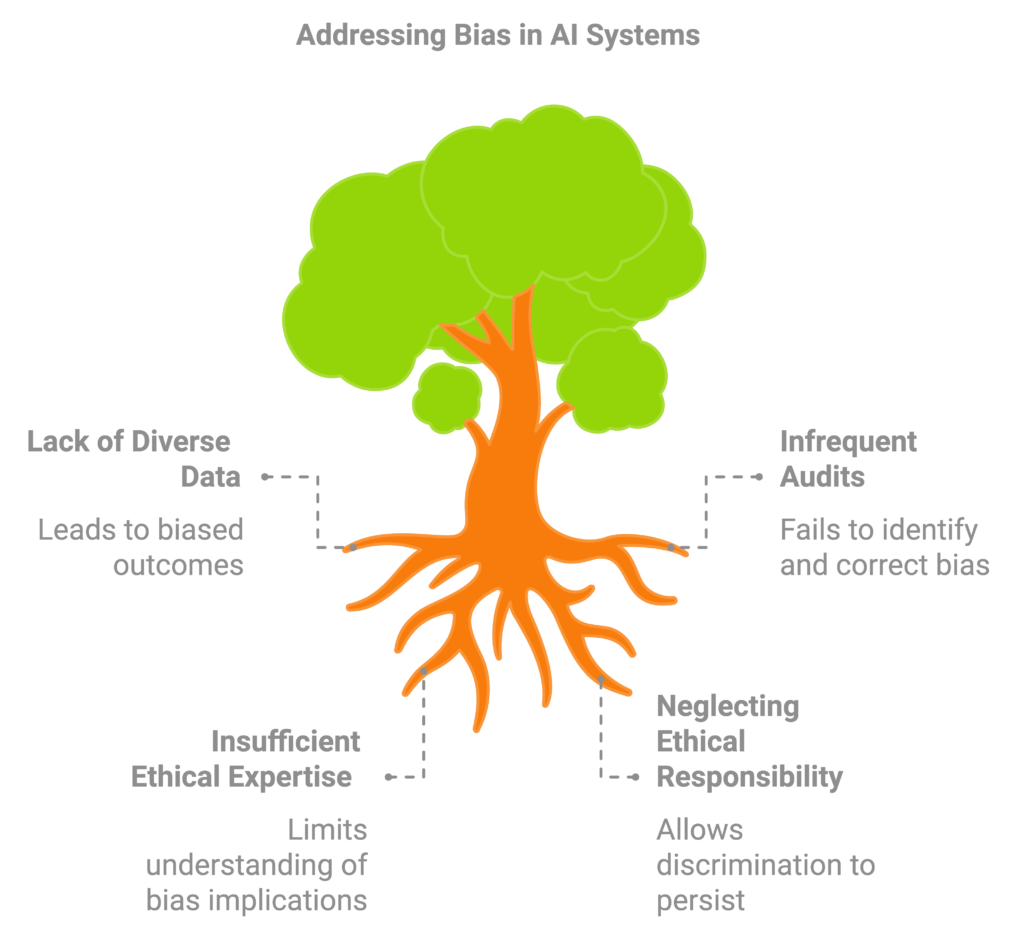

The Bias in AI: A Hidden Challenge

Now, let’s get into one of AI’s biggest ethical challenges: Bias. We tend to think of AI systems as neutral, right? After all, it’s just numbers and algorithms, so how could that be biased? But here’s the reality: AI can be just as biased as the data it learns from. Imagine you’re applying for your dream job, and the company uses AI to filter applications. That’s efficient for the company, right? But then you find out the AI system is systematically scoring female candidates lower. It seems shocking, but this reportedly happened with an experimental hiring tool at Amazon, which the company ended up scrapping. Why? Because the tool was trained on résumés from a male-dominated workforce, and without anyone intending it, the AI learned to favor men over women.

This isn’t just Amazon’s issue—it’s something we see across many industries. Why does bias in AI happen? Well, AI learns from historical data, and if that data is biased, the AI will reflect those same biases. It’s like teaching someone to make decisions based entirely on the past without ever questioning if the past was fair to begin with. So how do we fix this? It starts with being intentional about the data we use. We need diverse, representative data when building AI models, and we can’t just set it and forget it. Regular audits are key to making sure these systems stay fair over time. And it’s not just a technical issue—it’s an ethical one. We need to involve ethicists and people who specialize in asking the tough questions, ensuring that AI doesn’t just get the job done, but does it in a way that’s fair to everyone.
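To make the idea of a "regular audit" a little more concrete, here is a minimal sketch of one common check: comparing how often each group gets selected. The data, group labels, and function names are all made up for illustration, and the 0.8 cutoff is borrowed from the "four-fifths" rule of thumb used in US employment guidelines. A real audit would look at far more than this one number.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of positive decisions per group.

    `decisions` is an iterable of (group, was_selected) pairs.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group was favored
    return min(rates.values()) / highest


# Hypothetical screening results: (group, did the model advance the applicant?)
decisions = (
    [("men", True)] * 6 + [("men", False)] * 4
    + [("women", True)] * 3 + [("women", False)] * 7
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)

# Assumed threshold: the four-fifths rule of thumb flags ratios below 0.8.
needs_review = ratio < 0.8

print(rates)
print(f"ratio={ratio:.2f}, needs_review={needs_review}")
```

A low ratio doesn’t prove the system is unfair, and a high one doesn’t prove it’s fair. It’s simply a signal that a human should take a closer look at the data and the model.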

Privacy Concerns: How Safe Is Your Data?

That brings us to something that hits even closer to home for all of us: Privacy. Have you ever felt a bit creeped out when an ad for something you just Googled suddenly pops up on your social media feed? Maybe you were looking up vacation spots, and now every website is showing you hotel deals. While it’s convenient, it’s also unsettling. How much of your personal data is floating around out there, and who’s using it? The truth is, most of us don’t really know. And that’s where the ethical concerns start piling up.

Think back to the Cambridge Analytica scandal: data from tens of millions of Facebook users was harvested without their consent and used for political campaigning. It was a wake-up call for many of us. We often give away our data without a second thought, but once it’s out there, it’s hard to control how it’s used. Who owns your data, and how do we ensure it’s handled responsibly? While regulations like the European Union’s GDPR are helping set the rules for protecting privacy, companies need to go further. It’s not just about following the law; it’s about earning trust. Companies should anonymize data wherever they can and give users more control over what they share. The goal should be clear: a future where privacy isn’t a luxury, but a standard.
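What might "anonymizing" look like in practice? One small first step is to strip direct identifiers from a record and replace the user’s ID with a keyed hash before the data is used for analysis. The sketch below uses Python’s standard `hmac` and `hashlib` modules; the field names, the sample record, and the key are hypothetical. Strictly speaking this is pseudonymization rather than full anonymization, since whoever holds the key can still link the tokens back to people, and the remaining fields may still identify someone in combination.

```python
import hashlib
import hmac


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 hash (HMAC)."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def strip_direct_identifiers(record, secret_key, id_field="email",
                             drop_fields=("name", "phone")):
    """Return a copy of `record` without direct identifiers.

    The field names here are assumptions for illustration only.
    """
    cleaned = {
        key: value
        for key, value in record.items()
        if key != id_field and key not in drop_fields
    }
    cleaned["user_token"] = pseudonymize(record[id_field], secret_key)
    return cleaned


# Hypothetical record and key; in practice the key is stored separately
# from the data and never hard-coded.
secret_key = b"example-key-kept-outside-the-dataset"
record = {
    "email": "alex@example.com",
    "name": "Alex Doe",
    "phone": "555-0100",
    "searched_for": "vacation spots",
}

cleaned = strip_direct_identifiers(record, secret_key)
print(cleaned)
```

Using a keyed hash instead of a plain one matters here: without the key, someone can’t simply hash a list of known email addresses and match them against the tokens.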

Accountability in AI: Who’s Responsible?

But privacy is just the beginning. What happens when AI makes a mistake? Who’s responsible? That brings us to Accountability. Imagine you’re riding in a self-driving car. Out of nowhere, the car crashes. But since no one was driving, who do we hold accountable? Is it the manufacturer, the software developer, or maybe even the passenger who wasn’t controlling the vehicle? AI systems can feel like “black boxes”—so complex that even the people who created them can’t fully explain how they make decisions. So when something goes wrong, it’s often unclear who’s responsible.

Take the tragic example of the Boeing 737 Max. The plane’s flight-control software, MCAS, played a role in two catastrophic crashes. The problem? Pilots weren’t given enough information about how the automated system worked. When things went wrong, the system took control in ways that led to disaster. This raises a tough question: who’s accountable when humans and automated systems are working together? AI systems need to be transparent, so that people can understand how decisions are made. We also need clear accountability guidelines so that if something goes wrong, it’s easy to figure out who’s responsible.

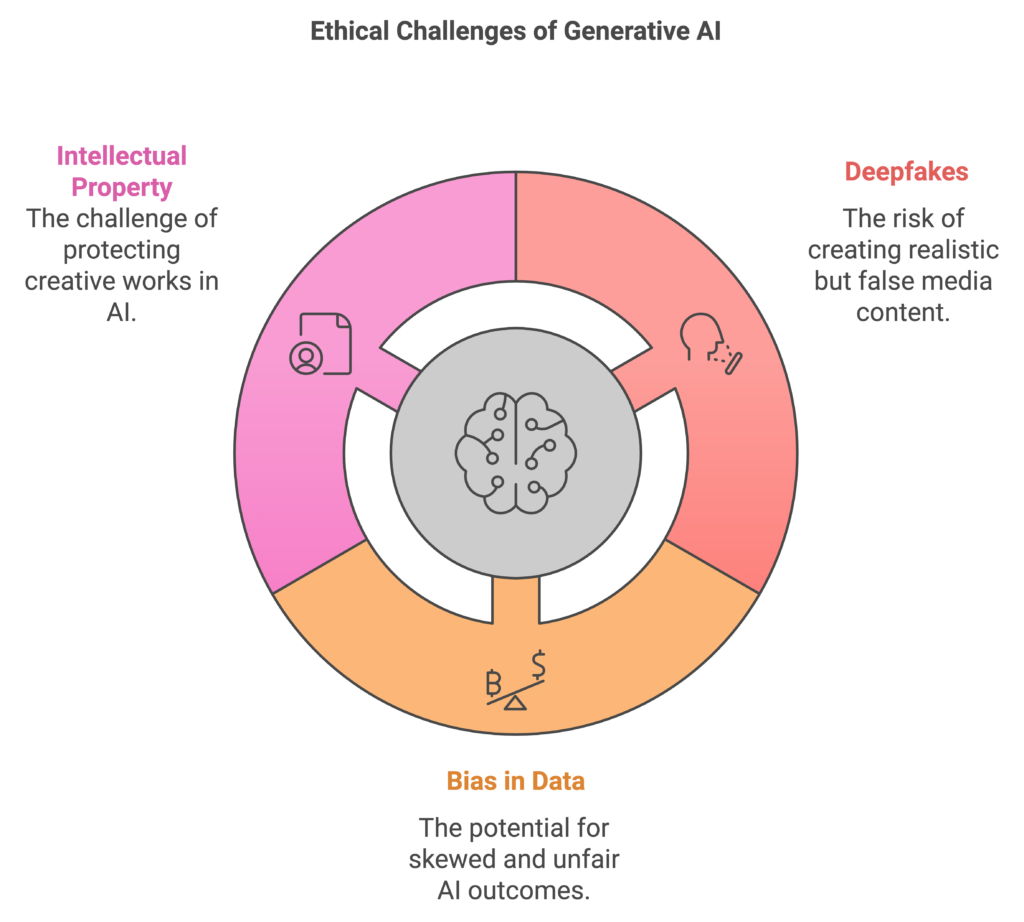

Generative AI: New Risks and Ethical Challenges

And that brings us to a different kind of AI that’s been making waves lately: Generative AI. Tools like ChatGPT can generate human-like text, create artwork, and even write music. You’ve probably seen examples of it all over the internet. But while these tools can be incredibly powerful, they also come with significant ethical risks.

For instance, deepfakes—realistic, AI-generated videos that show people saying or doing things they never actually did—are one of the darker sides of generative AI. Imagine a video of a world leader making a controversial statement, only to later find out that it was completely fabricated. These kinds of deepfakes can destroy reputations, spread misinformation, and cause chaos. And it doesn’t stop there. Generative AI learns from vast amounts of internet data, which can include biased or harmful content. If the data used to train these models contains stereotypes or biases, the AI could inadvertently reinforce and spread them.

Another issue is intellectual property. Who owns the content generated by AI? Is it the person who provided the input, the developer who created the tool, or is it just floating out there in legal limbo? As AI-generated content becomes more widespread, these questions are becoming harder to ignore.

So, what do we do about it? The answer lies in transparency. People need to know when they’re interacting with AI, and companies need to be open about how their systems work. Regular audits should be performed to check for bias, and users need to be aware of the potential risks of relying on AI-generated content. We can’t afford to ignore these issues, especially as AI continues to evolve.

Ethical Decision-Making: The Trolley Problem of AI

To tie it all together, let’s look at a classic thought experiment: the Trolley Problem. Imagine you’re in a self-driving car, and suddenly the car has to make a decision—swerve to avoid hitting a pedestrian but risk the lives of the passengers, or stay on course and hit the pedestrian to protect the people inside the car. What should the AI do? There’s no easy answer, but it highlights why ethical decision-making is so crucial in AI development.

Conclusion: Building an Ethical Future for AI

At the end of the day, the ethical challenges surrounding AI—whether it’s bias, privacy, accountability, or the rise of generative tools—aren’t going anywhere. But that doesn’t mean they’re unsolvable. By bringing together diverse voices—ethicists, policymakers, data scientists, and everyday users—we can build AI that works for everyone. It’s about asking the right questions, staying curious, and not being afraid to have tough conversations.

So the next time you interact with AI, whether it’s through a chatbot, a recommendation system, or a self-driving car, ask yourself: Who made this decision? Is it fair? And who’s accountable if something goes wrong? The more we reflect on these questions, the better equipped we’ll be to shape a future where AI not only amazes us with its capabilities but also serves us in an ethical, responsible way. Personally, I rely on AI every day, especially when I’m tackling a complex topic or studying for an exam. It has become my go-to resource for learning efficiently.